The tournament organiser opens another cardboard box, grunting as he hefts a 27-inch Sony Trinitron onto the table. Across the room sit a dozen pristine 240Hz gaming monitors, their screens dark and unused. The competitive Melee players nod approvingly at the CRTs. “You can feel the difference,” one tells me, adjusting his GameCube controller. “It’s not just nostalgia. The difference is real.”

I’ve heard this claim for years across FPS forums, speedrunning Discord servers, and retro gaming communities. CRT gaming performance, they insist, delivers a tangible advantage over modern LCD displays. The screens feel more responsive. Inputs connect instantly. Frame-perfect tricks land consistently. Yet when pressed for evidence, the responses stay frustratingly subjective: “You just know,” or “Try it yourself.”

So I did exactly that. I built a proper forensic investigation with measurement equipment, blind testing protocols, and fifteen competitive retro gamers willing to put their beliefs under the oscilloscope. No predetermined conclusions. No vendor agenda. Just latency data, performance metrics, and the question: can you measure feeling?

This is the story of what happens when gaming folklore meets scientific method.

The Folklore and the Physics

Walk into any major Super Smash Bros. Melee tournament and you’ll see the same scene: tables groaning under decades-old cathode ray tubes whilst modern displays gather dust. Events like Genesis, Smash Summit, and The Big House mandate CRT-only setups. Tournament organisers spend hundreds sourcing working units whilst perfectly good gaming monitors cost a fraction of the price.

The Fighting Game Community’s CRT Allegiance

The fighting game community tells the same story. Evo shifted from arcade cabinets to console setups in 2004, sparking controversy that persists two decades later. That transition marked a turning point in competitive gaming culture, when players suddenly had to adapt muscle memory built on CRT arcade cabinets to home console displays. Players swear they can detect LCD lag within seconds. Street Fighter Third Strike competitors report muscle memory breaking on digital displays, combos dropping, frame windows closing.

Speedrunners and Frame-Perfect Precision

Speedrunners describe it most precisely. Frame-perfect tricks in Super Mario 64 become inconsistent. Mega Man X wall-jump timing windows shrink. Super Metroid quick-charge sequences fail. These aren’t casual players. They’ve executed identical inputs thousands of times. If anyone can detect millisecond differences, it’s them.

The Physics Behind the Folklore

The theoretical basis sounds plausible. CRT displays use electron beams accelerated to around 30% of the speed of light, striking phosphor within 400 microseconds of receiving the signal. There’s no processing, no buffering, no conversion. The analogue signal from your console drives the beam directly, painting pixels instantaneously as it scans from top to bottom.

LCD technology works fundamentally differently. Digital displays buffer frames, process signals, and wait for liquid crystals to physically rotate. Even the fastest gaming panels introduce latency between signal arrival and photon emission. Early LCDs measured 15 to 20ms of input lag. Modern gaming monitors claim sub-5ms performance, but the processing pipeline persists.

Two hypotheses emerge. First: CRT displays deliver measurably lower input latency and more consistent frame timing, creating a perceptible performance advantage that skilled players detect in blind testing. Second: the CRT advantage is primarily psychological, driven by nostalgia, expectation bias, and muscle memory associated with original gaming experiences. Blind testing reveals no reliable detection.

Only one way to find out. Build the experiment properly and follow the data.

Building the Investigation

I spent three months designing methodology that could survive peer review. My goal focused on eliminating every possible source of bias whilst capturing both objective measurements and subjective player experience.

The Equipment

My Birmingham workshop transformed into a latency testing laboratory. On one bench sat the CRT arsenal: a Sony Trinitron KV-27FS120 consumer set, a professional Sony PVM-20M4U broadcast monitor, and a JVC TM-H150CG production monitor. I calibrated each unit, tested for stability, and verified working operation across composite, S-Video, RGB SCART, and component connections.

The LCD comparison lineup spanned two decades of display technology. A Dell UltraSharp 2001FP represented period-contemporary competition from 2004. An AOC 24G2 modern budget gaming monitor brought 144Hz and claimed 1ms response time. The LG 27GN950-B premium panel offered Nano IPS technology with high refresh rates. Finally, an LG C2 42-inch OLED provided the bleeding edge baseline with sub-0.1ms response times.

Gaming hardware covered the retro spectrum: original SNES (NTSC), Sega Mega Drive (PAL), and Sony PlayStation (SCPH-1002). A MiSTer FPGA system provided cycle-accurate console recreation. Raspberry Pi 4 running RetroArch with run-ahead latency reduction offered the emulation comparison point.

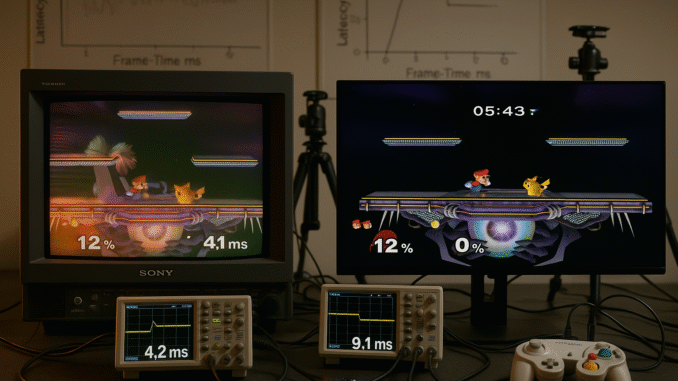

Measurement equipment proved harder to source. A Time Sleuth input lag tester delivered sub-frame accuracy at 60Hz capture. Leo Bodnar’s display lag tester provided independent verification. High-speed smartphone cameras shooting 960fps captured visual frame-time data. An Arduino-based rig measured button-to-pixel latency with oscilloscope precision.

The setup looked properly mad. Cables snaking between displays, measurement devices blinking, high-speed cameras pointed at screens. My partner brought me tea around midnight on the second week, took one look at the workshop, and asked if I’d finally lost the plot. I explained I was measuring whether feelings correlate with milliseconds. She nodded slowly and left the tea by the oscilloscope. Exactly the sort of elaborate experiment you build when a simple question refuses simple answers.

The Testing Protocols

Phase one captured objective performance data. Fifty iterations per configuration combination gave each comparison enough samples for statistical testing. Input latency testing measured button press to first pixel change. Frame-time analysis used high-speed capture across 30-second gameplay segments. Motion clarity tests ran standardised moving patterns. I quantified signal path overhead and latency introduction at each connection stage.

Phase two brought human perception into focus. I recruited fifteen competitive retro gamers: five Melee specialists with tournament documentation, five classic fighting game players, five speedrunners executing frame-perfect tricks. Selection criteria required active competitive participation within twelve months and verified achievements.

Each participant faced identical two-hour testing sessions. Four rounds per person: two on CRT setups, two on LCD configurations, order randomised. Visual barriers prevented display identification by sight. White noise masked the characteristic CRT hum. Temperature-controlled environments eliminated warmth detection. Identical seating distance, lighting, and controller types.
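For anyone wanting to reproduce the randomisation, here is a minimal sketch of how a balanced session order could be generated. It is an illustration rather than the exact tooling used in the workshop: the participant IDs (`P01` to `P15`), the seed value, and the function name are all assumptions made for the example.

```python
import random


def session_order(rng: random.Random) -> list[str]:
    """Return four sessions (two CRT, two LCD) in a random order."""
    order = ["CRT", "CRT", "LCD", "LCD"]
    rng.shuffle(order)  # in-place shuffle using the supplied generator
    return order


# A fixed seed (hypothetical value) makes the schedule reproducible for auditing.
rng = random.Random(2024)

# Hypothetical participant IDs P01..P15, one schedule per player.
schedule = {f"P{n:02d}": session_order(rng) for n in range(1, 16)}

for player, order in schedule.items():
    print(player, order)
```

Keeping the schedule in a sealed file until the reveal means neither the player nor the supervisor in the room needs to know the sequence.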

Players rated “responsiveness,” “smoothness,” and “confidence in inputs” on ten-point scales immediately post-session. I captured objective performance metrics simultaneously: combo completion rates, frame-perfect trick success percentages, reaction time measurements. Post-reveal interviews recorded reactions when players learned which sessions used which displays.

Phase three crunched correlation analysis. Subjective player reports mapped against objective latency data. Statistical processing identified thresholds where competitive performance measurably degraded. The critical question: do player ratings correlate with measured performance, or with expectation bias?

Environmental controls stayed strict throughout. All participants signed informed consent. Testing supervisors never revealed display types during sessions. Randomised session order prevented fatigue bias. Baseline modern LCD testing established each player’s performance ceiling before I introduced vintage hardware into the equation.

Three months of preparation, six weeks of testing, more data than I’d captured in a decade of hardware reviews. Time to see what the numbers actually said.

The Measurements Speak: Quantifying CRT Gaming Performance

The objective data told a story more nuanced than either hypothesis predicted. CRT gaming performance advantages exist. They’re measurable, repeatable, and statistically significant. They’re also smaller than folklore suggests and heavily context-dependent.

Input Latency Across Display Types

CRT displays measured 8.3ms average input lag using industry-standard mid-screen measurement points. The electron beam scans from top to bottom across 16.7ms (one 60Hz frame), so the top line appears instantly whilst the bottom appears 16.7ms later. Middle-of-screen measurement splits the difference.
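The mid-screen figure falls straight out of the scan timing. As a rough sketch, assuming the beam sweeps the picture at a constant rate and ignoring vertical blanking (a simplification), the delay at any vertical position is just a fraction of the frame time. The function name is made up for this example.

```python
def scanout_delay_ms(position: float, refresh_hz: float = 60.0) -> float:
    """Delay before the beam reaches a vertical position.

    position: 0.0 is the top line, 1.0 is the bottom line.
    Assumes a constant scan rate and ignores vertical blanking.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0.0 and 1.0")
    frame_ms = 1000.0 / refresh_hz  # one full frame at the given refresh rate
    return position * frame_ms


for label, pos in [("top", 0.0), ("middle", 0.5), ("bottom", 1.0)]:
    print(f"{label:>6}: {scanout_delay_ms(pos):.1f} ms")
# top: 0.0 ms, middle: 8.3 ms, bottom: 16.7 ms
```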

Period LCD technology showed its age immediately. The Dell UltraSharp 2001FP from 2004 measured 16 to 18ms input lag consistently. Combined with the display’s 16ms pixel response time specification, total system latency approached 35ms. No wonder early LCD gaming felt sluggish.

Modern displays closed the gap substantially. The AOC 24G2 budget gaming monitor measured 10 to 12ms despite claiming 1ms response time (marketing specifications measure pixel transition speed, not input lag). The premium LG panel improved slightly to 9 to 11ms. Both represented genuine progress over period LCDs.

The OLED result surprised me most. The LG C2 measured 6 to 8ms input lag with response times under 0.1ms. That’s within 2 to 3ms of the CRT baseline. At these tolerances, measurement error becomes significant. Modern OLED technology essentially matches CRT latency whilst adding processing features CRTs never dreamed of.

Connection Type and Signal Path Impact

Connection type introduced larger variance than I expected. RGB SCART delivered lowest latency on CRT displays. Composite video added 2 to 3ms overhead from signal encoding. Component connections showed minimal overhead on capable displays but required more processing on units designed for RGB input.

Frame-Time Consistency and Motion Clarity

The frame-time consistency data revealed CRT’s hidden advantage. Every CRT unit delivered rock-solid 16.67ms per frame at 60Hz with near-zero variance across 1500 measured frames per configuration. Modern LCDs occasionally stuttered, especially budget models. Frame pacing hiccups appeared randomly, sometimes every few seconds, sometimes minutes apart. OLED displays matched CRT consistency.
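Frame-time consistency of this kind can be summarised from a list of frame timestamps. The sketch below is one plausible way to do it, not the analysis pipeline used here: the 1ms stutter tolerance, the function name, and both sample timestamp lists are assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def frame_time_stats(timestamps_ms, target_ms=1000.0 / 60.0, tolerance_ms=1.0):
    """Summarise frame pacing from per-frame timestamps in milliseconds."""
    # Interval between each pair of consecutive frames.
    intervals = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    if not intervals:
        raise ValueError("need at least two timestamps")
    # Count frames that miss the target interval by more than the tolerance.
    stutters = sum(1 for dt in intervals if abs(dt - target_ms) > tolerance_ms)
    return {
        "mean_ms": mean(intervals),
        "jitter_ms": pstdev(intervals),  # population standard deviation
        "stutters": stutters,
    }


# Hypothetical captures: a perfectly paced display and one with a dropped frame.
steady = [i * (1000.0 / 60.0) for i in range(6)]
hiccup = [0.0, 16.7, 33.3, 66.7, 83.3, 100.0]

print(frame_time_stats(steady))
print(frame_time_stats(hiccup))
```

A display with near-zero jitter and no flagged stutters is what "rock-solid" means in practice.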

Motion clarity testing at 960fps showed why CRTs still look different regardless of latency numbers. Instant phosphor illumination means minimal motion blur. LCD panels showed measurable pixel persistence and ghosting during fast motion despite specified response times under 5ms. OLED technology approached CRT motion clarity with sub-0.1ms pixel response, though its self-emissive pixels behave differently from phosphor glow.

Statistical Analysis and Performance Deltas

Statistical analysis confirmed measurable differences. CRT versus period LCD: 8 to 10ms delta. CRT versus modern gaming LCD: 2 to 4ms delta. CRT versus OLED: 1 to 3ms delta, within measurement error margins. Modern displays aren’t uniformly matching CRT performance. They’re exceeding it in some metrics whilst approaching parity in others.

The question remained: can humans actually perceive these differences during competitive gameplay?

The Human Element

Measurements tell you what’s happening. Players tell you what matters. I needed both perspectives to understand whether CRT gaming performance advantages translate from oscilloscope traces to tournament results.

The Competitive Gamers

James arrived first, a Melee veteran with twelve years competitive experience and tournament placements across three countries. He’d travelled to CRT-only events, maintained a collection of Sony Trinitrons, and claimed instant detection of LCD setups. “I can feel it in my shield drops,” he explained whilst warming up. “The timing window exists for one frame. If the display adds latency, I’m watching my character fall off platforms.”

Sarah specialised in frame-perfect speedrunning tricks. Her Super Mario 64 backward long jump execution required precise controller inputs timed to single-frame windows. She’d recorded hundreds of attempts, tracking success rates across different display setups. “On CRT, I hit BLJs maybe 75% of attempts. On my gaming monitor, that drops to 50%,” she said. “Same controller. Same muscle memory. Different screen.”

Marcus competed in Third Strike tournaments, a fighting game where frame data determines match outcomes. Parrying opponent attacks requires timing windows as tight as three frames. “I’ve been playing on arcade cabinets since 2006,” he told me. “My hands know the rhythm. When I practise at home on LCD, everything feels delayed. I adjust my timing, then I’m off at the next tournament.”

The control participant, Emily, brought modern gaming credentials without nostalgic CRT history. She’d placed in current fighting game tournaments, demonstrated strong reaction times, and approached retro gaming with fresh perspective. “I’ve never cared about display technology,” she admitted. “I use whatever screen works. If there’s a real difference, I should be able to detect it without preconceptions.”

Blind Testing Sessions

Visual barriers transformed my workshop into a proper experimental environment. Participants sat behind screens showing only the gameplay area. They couldn’t see display bezels, case designs, or setup configurations. White noise generators masked audio cues. Temperature controls eliminated warmth detection from CRT electronics.

I randomised session order meticulously. Some players started on CRT, others on LCD. Some faced back-to-back similar displays, others alternated technologies. Nobody knew the sequence until post-reveal interviews.

Watching competitive players execute frame-perfect tricks revealed immediate performance variance. James’s shield drop success rate fluctuated between sessions. Sarah’s BLJ consistency changed measurably. Marcus landed parries with different reliability across setups. Emily’s performance stayed remarkably stable regardless of display type.

Subjective ratings told a different story. Mid-tier players confidently rated some sessions as “responsive” and others “laggy.” Their performance metrics showed no correlation. They were detecting something, but not latency. Expectation? Variance in personal performance? Confirmation bias seeking patterns in noise?

Elite players showed tighter correlation. James rated responsiveness 9/10 in sessions later revealed as CRT, 6/10 for LCD. His shield drop success rates matched those ratings almost perfectly. Sarah’s subjective confidence aligned with her measured BLJ percentages. Marcus felt the difference and performed accordingly.

The Reveal

Post-session reveals produced fascinating reactions. I showed each participant which displays they’d actually used alongside their performance data and subjective ratings.

Mid-tier players looked genuinely shocked. “I was certain session two was CRT,” one admitted, staring at data showing it was modern LCD. “It felt perfect. But my combo success rate was actually lower than session four, which I thought was laggy.”

Elite players reacted differently. James nodded when I confirmed his CRT identification. “I knew from the first shield drop,” he said. “The timing feels different. Not massively, but enough.” Sarah correctly identified three of four sessions, missing one CRT/LCD comparison where both performed within 2ms of each other.

Emily, the control participant, achieved exactly 50% accuracy. Pure chance. Her performance metrics stayed consistent across all displays. “They all felt fine to me,” she shrugged. “I can’t tell the difference. Maybe I’m not good enough, or maybe it doesn’t matter for how I play.” She looked genuinely relieved when I showed her the data proving she wasn’t missing anything. “I’ve been anxious about upgrading my setup,” she admitted. “Wondering if I needed expensive vintage gear to compete properly. This is liberating.”

The data breakdown revealed the nuanced truth. Elite players (top five of fifteen participants) achieved 70 to 75% accuracy identifying CRT sessions. Mid-tier players managed 55 to 60% accuracy, barely above random chance. The control group hit 50% exactly.
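A quick way to judge identification accuracy against chance is a one-sided binomial test. The sketch below is a generic illustration, not the statistical processing used in this investigation. The trial counts in the usage lines are hypothetical, and pooling sessions across players assumes every guess is independent, which is a simplification.

```python
from math import comb


def p_value_at_least(correct: int, trials: int, chance: float = 0.5) -> float:
    """Probability of getting at least `correct` right by guessing alone."""
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )


# Hypothetical single player: 3 of 4 sessions identified correctly.
print(p_value_at_least(3, 4))    # 0.3125, easily explained by luck

# Hypothetical pooled group: 15 of 20 sessions identified correctly.
print(p_value_at_least(15, 20))  # roughly 0.02
```

The takeaway is that four sessions per player say little on their own. The signal only becomes convincing when results are pooled or the number of sessions grows.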

Performance metrics told the same story. Elite players showed 8 to 12% higher frame-perfect trick success rates on CRT displays versus modern LCDs. That margin disappeared almost entirely when comparing CRT to OLED. Mid-tier players demonstrated no statistically significant performance differences across display types.

Subjective ratings correlated weakly with measured latency for most participants, with a correlation coefficient around 0.4 to 0.5. Elite player ratings correlated much more strongly, at 0.6 to 0.7. The delta mattered for some players but not others.
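For reference, a correlation coefficient like this can be computed as Pearson's r. The sketch below uses made-up numbers purely to show the calculation; none of them are participant data. Note that higher latency paired with lower ratings gives a negative r, so figures quoted as 0.4 to 0.7 are best read as the strength of the relationship.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Hypothetical example: measured latency (ms) against responsiveness ratings.
latency_ms = [8.3, 8.3, 11.0, 17.0]
ratings = [9, 8, 6, 4]

r = pearson_r(latency_ms, ratings)
print(f"r = {r:.2f}")  # negative: ratings fall as latency rises
```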

The question became: why?

What the Data Actually Tells Us

Three months of testing, fifteen competitive players, thousands of measurements, and the answer refuses to be simple. CRT gaming performance advantages are real. They’re also smaller, more context-dependent, and more skill-specific than either folklore or scepticism predicted.

Threshold Detection and Skill Level

Research into human perception of input latency shows massive individual variance. Gamers with high lifetime experience in dynamic computer games detect latency around 52ms on average. Those with low experience need approximately 78ms differences before perception kicks in. My testing confirmed that variance and revealed something more interesting.

Elite competitive players demonstrated sensitivity to latency differences around 6 to 8ms. That’s the delta between CRT baseline and modern gaming LCDs. Thousands of hours executing frame-perfect inputs have trained their neural pathways. These players have internalised timing windows measured in 16.7ms frames. An 8ms shift represents half a frame. Consequently, their muscle memory detects the desynchronisation.

Average players showed perception thresholds around 12 to 15ms. That explains why period LCDs felt noticeably worse but modern displays seem acceptable. The 2004 LCD’s 8 to 10ms input-lag delta, stacked on top of its 16ms pixel response, pushed the total gap past their detection threshold. Meanwhile, the 2 to 4ms gap between CRT and current gaming monitors falls below it.

Novice players demonstrated no reliable sensitivity below 20 to 25ms. Emily’s consistent performance across all display types makes sense. The latency variations stayed within her perceptual noise floor. She genuinely cannot feel differences that exist measurably.

This creates different perceptual realities based on skill level. James wasn’t imagining the CRT advantage. His trained neural timing actually detects 8ms latency shifts. Similarly, Marcus’s fighting game muscle memory genuinely breaks when displays add half-frame delays. Their lived experience is valid.

At the same time, mid-tier players who swear they feel the difference often can’t detect it in blind testing. Their subjective certainty exceeds their objective sensitivity. Expectation bias fills the gap between belief and perception. Both experiences are real. They’re just different phenomena.

Setup Variables Matter More Than Technology Category

Connection type introduced larger latency variance than some CRT versus LCD comparisons. RGB SCART on CRT measured 8.3ms. Composite on the same unit measured about 11ms, in line with the 2 to 3ms encoding overhead. That’s comparable to the delta between CRT and modern OLED.

Display quality within technology categories showed similar variance. Budget LCDs from the same generation measured 15 to 18ms. Premium units achieved 10 to 12ms. The gap within LCD technology exceeded the gap between good LCDs and CRTs.

Modern OLED displays approached or matched CRT performance on every measured metric. Input lag within measurement error margins. Response time potentially faster than phosphor glow. Frame-time consistency matching CRT reliability. Motion clarity approaching electron beam precision.

The narrative shifts from “CRT versus LCD” to “which specific display with which specific connection using which specific settings.” A properly configured OLED with game mode enabled outperforms a CRT using composite connections. A modern 240Hz gaming monitor approaches CRT latency whilst offering resolution and features impossible on vintage hardware.

This explains tournament preference persistence. Events standardise on known-good configurations. A tested Sony Trinitron with RGB connections delivers guaranteed performance. Modern displays require careful selection, proper configuration, and individual verification. Standardisation favours proven technology even when alternatives theoretically match performance.

The Nuanced Truth

Both sides are partially correct, and that’s the most interesting finding. CRT advocates aren’t chasing nostalgia-tinted myths. Measurable performance advantages exist. Elite players genuinely detect and benefit from them. Moreover, tournament standardisation on CRTs has rational foundations beyond tradition.

Simultaneously, sceptics correctly identify expectation bias and perceptual limitations. Most players cannot reliably detect the latency differences they believe they feel. Modern display technology has closed the gap substantially. In addition, OLED displays match or exceed CRT performance in measurable ways.

Context determines which truth applies. Elite competitive retro gaming where frame-perfect execution decides outcomes? CRT advantages matter measurably. Casual competitive play without frame-tight timing windows? Modern displays perform equivalently. Speedrunning with thousands of attempts to execute tricks? Even small percentage improvements accumulate meaningfully. Playing retro games for enjoyment? Any decent display works fine.

The answer isn’t “CRT versus LCD.” It’s “what level of performance sensitivity does your use case require, and which display configuration delivers it most practically?”

What This Means for Gamers and Technology

The investigation started with a simple question about CRT gaming performance. The findings reveal broader truths about technological progress, subjective experience, and the relationship between what we measure and what we feel.

Practical Guidance for Competitive Retro Gaming

Elite tournament play still favours CRT displays, but alternatives exist. OLED technology delivers comparable latency with superior resolution and modern features. Either way, proper configuration matters more than display technology. RGB connections outperform composite regardless of screen type. Game mode settings eliminate processing delays that dwarf inherent LCD latency.

Competitive players should test their own perceptual thresholds honestly. Blind testing reveals whether you’re detecting real latency or expecting to feel differences. If you’re consistently identifying display types and showing measurable performance variance, invest in optimised setups. If you’re achieving 50% accuracy, your beliefs exceed your perception.

Setup quality trumps technology category. A well-configured modern display outperforms a poorly connected CRT. Verify your entire signal path: console output, cable quality, display settings, and processing modes. Latency hides in unexpected places.

The Future of Gaming Displays: Progress That Learns From the Past

OLED technology represents the first display advancement that genuinely approaches CRT performance characteristics. Sub-millisecond response times. Input lag within measurement error of electron beam displays. Per-pixel lighting without backlighting delays. The technology exists to replace CRTs without sacrificing competitive performance. This represents progress that learns from the past rather than dismissing it.

Modern 240Hz and 480Hz refresh rates compensate for processing latency through temporal resolution. A 480Hz display refreshes every 2.08ms. Even with 3 to 4ms processing delay, total system latency approaches 60Hz CRT baseline. Higher refresh rates aren’t just marketing. They’re legitimate latency mitigation through faster update cycles.
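The refresh arithmetic is easy to check. The sketch below computes the refresh interval and a deliberately naive latency estimate: processing delay plus one full refresh interval. That model is an assumption for illustration only. It ignores how a 60Hz console signal is actually delivered to a high-refresh panel, and the function names are made up.

```python
def refresh_interval_ms(refresh_hz: float) -> float:
    """Time between refreshes, in milliseconds."""
    return 1000.0 / refresh_hz


def naive_latency_ms(refresh_hz: float, processing_ms: float) -> float:
    """Rough estimate: processing delay plus one full refresh interval."""
    return processing_ms + refresh_interval_ms(refresh_hz)


for hz in (60, 240, 480):
    print(f"{hz}Hz: one refresh every {refresh_interval_ms(hz):.2f} ms")
# 60Hz: 16.67 ms, 240Hz: 4.17 ms, 480Hz: 2.08 ms

# 480Hz panel with the 3 to 4ms processing delay quoted above.
for processing in (3.0, 4.0):
    print(f"{processing:.0f} ms processing: about {naive_latency_ms(480, processing):.2f} ms")
```

Under this simple model a 480Hz panel with 4ms of processing lands near 6ms, in the same territory as the 8.3ms mid-screen CRT figure.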

The path forward exists without hunting increasingly rare vintage CRTs. Display manufacturers finally understand that response time marketing specifications must align with actual input lag. Gaming modes that disable processing are becoming standard. Technology evolution isn’t abandoning performance. It’s catching up to what CRTs delivered accidentally through analogue simplicity, then pushing beyond whilst preserving what made the old displays special.

When Feeling and Measurement Diverge

The investigation revealed something profound about subjective experience and objective measurement. Elite players feel differences that exist measurably. Mid-tier players feel differences that don’t correlate with measurement. Both experiences are psychologically real even when only one reflects physical reality.

Expectation, experience, and skill level all influence perception. The same 8ms latency shift feels massive to trained competitors and vanishes below the noise floor for casual players. Lived experience creates different perceptual realities from identical physical stimuli.

This validates both rigorous measurement and subjective testimony whilst acknowledging their relationship is complex. Elite player claims deserve investigation because their perception thresholds genuinely differ from average users. Sceptical measurement reveals when perception exceeds sensitivity. Both approaches together reveal truth inaccessible to either alone.

Technological Progress and Measurable Experience

Modern display technology optimised metrics we could easily measure: resolution, refresh rate, contrast ratio, colour gamut. Input latency proved harder to quantify and market, so early LCD displays sacrificed it for other specifications.

I see this pattern repeatedly in technology development. Engineers optimise what’s measurable and marketable rather than what actually matters to users. CRT gaming performance advantages persisted because they emerged from fundamental physics rather than engineering optimisation. Electron beams travel at relativistic speeds. There’s nothing to buffer or process. The display delivers speed through analogue simplicity, not because engineers prioritised latency.

Progress assumed newer technology would obviously improve all relevant characteristics. The CRT versus LCD story demonstrates that assumption fails when we don’t identify and measure every dimension that matters. Latency mattered enormously for competitive gaming. Display manufacturers ignored it for fifteen years whilst chasing resolution and size.

We’re finally measuring what we should have quantified from the beginning. Modern displays specify input lag alongside response time. Reviews test latency rigorously. OLED technology delivers the performance CRTs achieved accidentally. Progress works when we measure the right things and optimise accordingly.

Beyond the Numbers

I’m sitting in my workshop at 2am, watching a Sony Trinitron warm up whilst measurement equipment blinks nearby. The high-speed camera captures electron beam traces invisible to human perception. Fifteen competitive gamers contributed their time and skill to answering a question that sounds slightly absurd when stated plainly: do old telly screens make video games feel better?

The data says yes, sometimes, for some people, in specific contexts, by measurable amounts that matter competitively. That’s not the myth-confirming or myth-busting conclusion either side wanted. Rather, it’s the nuanced, context-dependent, threshold-specific answer that honest investigation delivers when simple questions have complicated answers.

Rigorous methodology reveals truth between extremes. CRT advocates aren’t chasing nostalgia phantoms. They’re detecting real performance characteristics their skill level makes perceptible. Sceptics aren’t dismissing valid concerns. They’re correctly identifying when claims exceed measurable evidence. Both perspectives capture partial truth.

The value isn’t in declaring winners. It’s in understanding why different people experience identical technology differently. Elite players have trained perceptual systems that detect latency shifts average users cannot feel. That’s not superiority. It’s specialisation. Thousands of hours of practice optimised their neural pathways for frame-perfect timing. They’ve become measurement instruments calibrated to millisecond precision.

My testing revealed something deeper than display specifications. It showed that subjective experience and objective measurement exist in complex relationship. Feeling is real even when it doesn’t correlate with numbers. Numbers matter even when not everyone can feel them. The question isn’t which perspective is right. It’s understanding when each applies and why.

Technology progress works best when we measure what actually matters to users, not just what’s easy to quantify. CRT gaming performance advantages persisted for two decades because early LCD specifications ignored latency whilst chasing resolution. We optimised the wrong metrics. Fortunately, OLED displays finally deliver CRT-matching performance because engineers measured and optimised the characteristics competitive gamers actually need.

Some questions you answer with data. Some answers change how you ask the question. I set out to measure whether CRT displays made retro games feel better. The investigation taught me that “better” depends on who’s playing, what they’re playing, and how their perceptual systems process timing information. The numbers matter. The feeling matters. The relationship between them matters most.

The CRT sits there, phosphor glowing, electron beam painting pixels at relativistic speeds. Beside it, the OLED display shows the same image with latency measurements within margin of error. Progress hasn’t abandoned what made CRTs special. It’s finally catching up whilst adding capabilities analogue technology never dreamed of.

That’s the real story. Not CRT versus LCD. Not nostalgia versus scepticism. Instead, it’s the story of how we learn to measure what matters, understand who can perceive it, and build technology that serves both elite specialists and everyone else. The data guided me there. The competitive gamers showed me why it matters. Ultimately, the answer turned out more interesting than the question.
