By Talia Wainwright, Future-Forward Technologist for Netscape Nation

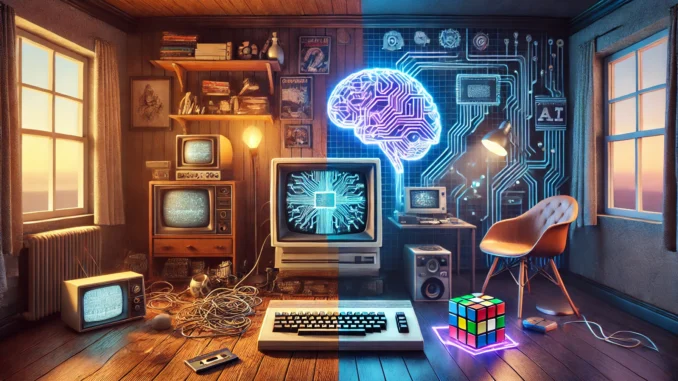

Introduction: Bridging Cassette Noises and Chatbots

If you’re Gen X, you might recall the screech of a cassette loading on an Amstrad CPC or ZX Spectrum while you hoped it wouldn’t crash after ten excruciating minutes of waiting. You remember a world where writing a few lines of BASIC felt revolutionary, and “AI” was something relegated to Hollywood. Think WarGames (1983), with its brush with nuclear war, or Knight Rider (1982) and the smooth-talking KITT. Then came The Terminator (1984), warning us how badly things could go if machines got too smart.

At the time, real artificial intelligence (AI) was mostly locked away in academic labs, existing as rudimentary “expert systems” or clunky chatbots. The big leaps were yet to come. Fast-forward a few decades, and we find ourselves in an era of AI-driven chatbots that generate essays, code, and even entire songs, while self-driving cars navigate city streets.

How did we get here? And what does it mean for the generation that once juggled floppy disks and dial-up modems? In this article, we’ll explore how the gritty, do-it-yourself spirit of early home computing shaped Gen X’s perspective on AI, and what’s next for those of us who watched its rise firsthand.

The 8-Bit Foundations: A Generation of Tinkerers

In the 1980s, home computers like the Commodore 64 (1.02 MHz MOS 6510 CPU), ZX Spectrum (3.5 MHz Zilog Z80), and BBC Micro (2 MHz 6502) brought computing into living rooms across the UK and beyond. Memory was minimal—16K, 48K, maybe 64K—and yet we devoured every byte. Iconic games such as Jet Set Willy and Elite pushed hardware limits through relentless optimization. Loading screens and beep-laden intros were normal, and if a game crashed mid-level, you might poke around the registers or the code itself to see what went wrong.

Back then, “AI” was more science fiction than science fact, but we saw hints: WarGames teased a thinking computer, even if our 8-bit machines could barely display sprites without flickering. Still, the notion lingered that someday computers might truly “think.”

Community was the bedrock. Local user groups, Bulletin Board Systems (BBS), and type-in listings from magazines gave curious minds a crash course in programming and hardware tinkering. What speech synthesis existed was primitive and monotone, yet we marveled anyway. Each beep and glitch was a reminder that technology was an adventure.

Talia’s personal anecdote: “My first ‘AI’ experience was a DOS-based chatbot in the late ’80s. It spat out random phrases masquerading as conversation, but I remember thinking: If this is possible now, imagine what it’ll do in a few decades.”

Despite limited RAM and puny clock speeds, Gen X found ways to create big things in small spaces. That hands-on, scrappy ethos still underpins how we approach emerging tech today.

Between Hype and Winters: AI’s Long Road Through the ’90s and 2000s

By the turn of the ’90s, artificial intelligence had already weathered repeated “winters” of dashed hopes and dried-up funding. True, there were expert systems for niche tasks, rudimentary voice recognition (mostly awful), and chess computers strong enough to beat most amateurs. But none of it came close to the KITT-level intellect pop culture had primed us for.

Meanwhile, Gen X watched the internet blossom from scattered dial-up BBSes into a bustling, always-on network. These early online communities not only fed our appetite for software and social connection but also began producing data on a scale previously unimaginable. Unbeknownst to most, every forum post, email, and search query was laying a foundation for machine learning (ML), the branch of AI in which computer models are trained on large datasets. Without the data supply from these new digital habits, modern AI might still be stuck in second gear.

At the same time, personal computing soared ahead. We hopped from MS-DOS to Windows 95 and from floppy disks to CDs and DVDs, eventually pivoting to cloud storage. Though AI still lurked in the background, the stage was being set. Once hardware caught up and data became abundant, the AI breakthroughs would come fast. And they did: from around the mid-2000s, a renaissance in machine learning ended those long winters of disappointment.

The Multimedia Leap: How User Experience Laid the Groundwork

Throughout the ’90s, multimedia PCs dazzled home users with CD-ROMs, advanced sound cards, and vibrant graphics. Titles like Myst offered immersive puzzle worlds. Shareware hits like Doom flexed the improved performance of new CPUs. Meanwhile, the Amiga and Atari ST fostered a subculture known as the demoscene—a collective of programmers who pushed 16-bit hardware to create jaw-dropping audiovisual showcases far beyond what anyone thought possible.

This bigger, bolder user experience quietly foreshadowed a future in which large-scale data handling was essential. The parallels were hard to miss: better graphics called for advanced image processing, while improved audio hinted at more robust speech recognition. Though the internet was still finding its footing, the seeds of big data were sprouting. Gen Xers eager to download music files or swap 16-bit demos unintentionally tested and expanded network limits, further fueling the data boom AI needed.

Crashes, driver conflicts, and slow modems taught us resilience. If anything, they primed Gen X to endure the inevitable bumps that come with next-gen tech, such as machine learning models or “intelligent” systems that sometimes misbehave.

AI Today: When the Machines Talk Back

By the 2010s and 2020s, AI finally emerged from obscurity:

- Chatbots (ChatGPT, Claude, Gemini) that simulate conversation and generate text.

- AI coding tools (such as GitHub Copilot) that accelerate development.

- Machine-generated music & art that challenge our notions of creativity.

It’s almost everything we saw on screen in the ’80s and ’90s, minus the nuclear Armageddon. For those of us who grew up on The Terminator, it’s both thrilling and unnerving. Misinformation, deepfakes, and automation fears loom large, but so do the benefits of systems that can analyze complex data or collaborate on creative tasks.

The question is: who’s really in control? Our 8-bit roots taught us to open the case, study memory maps, or tweak a line of code to fix a glitch. Today, large language models and self-driving algorithms operate behind layers of corporate secrecy, making transparency and user agency more complicated. Yet Gen X knows better than to write off new tech just because it seems formidable. We’ve adapted before, and we can do it again, as long as we remain active participants rather than passive bystanders.

Our Gen X Vantage: From BASIC to Brainy Bots

If Boomers are wary and Gen Z finds AI second nature, Gen X stands on unique middle ground. We remember life before the internet, and we typed BASIC on a Spectrum not because it was easy but because that was what computing meant at the time. Now entire essays can be generated from a single prompt.

- We replaced phone books with AI-powered search.

- We swapped typed code for AI autocompletions.

- We learned everything the hard way—so we know how to adapt, pivot, and self-teach.

We’re arguably the best-equipped generation to evaluate AI. We’ve seen memory evolve from kilobytes to terabytes, and we understand that behind every glossy interface is a system—one that humans built, and humans can influence.

Future Outlook: Adapting, Empowering, and Avoiding a Skynet Scenario

Where do we go from here? For a generation raised on both cassette tapes and cloud computing, AI can be a powerful ally—if we stay engaged. It might:

- Replace certain tasks, but create new roles elsewhere.

- Empower us by handling mundane chores, freeing us for innovative work.

- Evolve into something unrecognizable, challenging us with ethical and existential questions.

Having survived yanking jammed floppies, the meltdown of Windows ME, and the pains of 56K modems, Gen X is no stranger to a challenge. The potential of AI is vast, but so are the stakes. Are we ready to guide this technology responsibly?

Conclusion: A Gen X Call to Action

From screeching cassette loads to near-omniscient AI assistants, our journey reveals the astonishing pace of tech evolution. Yet if there’s one abiding lesson from the 8-bit days, it’s that technology doesn’t just happen to us—we shape it. As Gen Xers, we’ve quietly steered digital progress, from hacking on underpowered hardware to grappling with emerging AI wonders.

Now that AI stands at the forefront, we face a pivotal question:

- Do we let AI overshadow human creativity and control?

- Or do we harness it, much like we once bent 8-bit hardware to our will?

We’re the generation that bridged the gap from BASIC to bots. Let’s keep leading by example—staying curious, hands-on, and ensuring technology remains rooted in the people who bring it to life.

An Alternative Perspective from Gary Holloway (Open-Source Evangelist)

“Even as Gen X celebrates AI’s breakthroughs, we can’t ignore the ownership dilemma. Who controls these models—and your data? In the early days, we could tweak a line of code to fix a glitch, but now proprietary AI systems run in server farms we can’t even see. Privacy and independence are at stake. Open-source AI communities could be our best defense, allowing us to host, audit, and improve these tools without ceding control to big corporations. It’s not about stopping progress; it’s about ensuring that as AI grows, its benefits remain in people’s hands—not hidden behind corporate paywalls or locked in walled gardens.”
